Have recent gains in global health gone to the poor in developing countries, or to the relatively rich? An answer:

> We find that with the exception of HIV prevalence, where progress has, on average, been markedly pro-rich, progress on the MDG health outcome (health status) indicators has, on average, been neither pro-rich nor pro-poor. Average rates of progress are similar among the poorest 40 percent and among the richest 60 percent.

That's Adam Wagstaff, Caryn Bredenkamp, and Leander Buisman in a new article titled "Progress on Global Health Goals: are the Poor Being Left Behind?" (full text here). The answer seems to be "mostly no, sometimes yes", but the exceptions to the trend are as important as the trend itself.

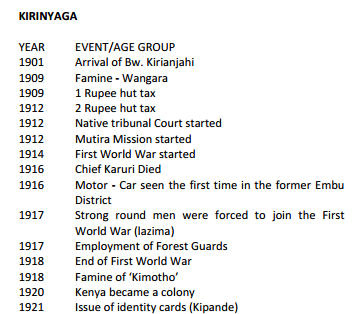

I originally flagged this article to read because Wagstaff is one of the authors, and I drew on a lot of his work for my master's thesis (which looked at trends in global health inequities in Ethiopia). One example is this handy World Bank report (PDF), which is a how-to for creating concentration indexes and other measures of inequality, complete with Stata code. A concentration index is essentially a health-inequality version of the Gini index: instead of showing the concentration of wealth by wealth, or income by income, you measure the concentration of some measure of health by a measure of wealth or income, often the DHS wealth index, since it's widely available.

If your chosen measure of health (let's say, infant mortality) doesn't vary by wealth, then plotting the cumulative share of that outcome against the cumulative share of the population, ranked from poorest to richest, gives a straight line at a 45-degree angle, sometimes called the line of equality. But in most societies the poor get relatively more of bad health outcomes (like mortality) and rather less of good things like access to vaccination. In both cases the plotted line, called a concentration curve, bends away from the line of equality: above it when a bad outcome is concentrated among the poor, below it when a good thing is concentrated among the rich. The further the concentration curve lies from the line of equality, the more unequal the distribution of the health outcome. The concentration index is simply twice the area between the two lines (again, the Gini index is the equivalent number when comparing income against income), taken as negative when the curve lies above the line of equality, i.e. when the outcome is concentrated among the poor. The relationship between the two is illustrated in this example graph from my thesis.
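To make the arithmetic concrete, here is a minimal sketch in Python (rather than the Stata used in the World Bank report), assuming unweighted individual-level data with no ties in wealth; real DHS analyses would also apply survey weights. It uses the well-known "convenient covariance" formula, C = 2 cov(h, r) / mean(h), where r is each person's fractional rank in the wealth distribution, and checks it against the geometric definition (1 minus twice the area under the concentration curve). The function names and the mortality figures are hypothetical.

```python
from typing import Sequence


def sort_by_wealth(health: Sequence[float], wealth: Sequence[float]) -> list[float]:
    """Return the health values ordered from poorest to richest."""
    if len(health) != len(wealth) or len(health) == 0:
        raise ValueError("health and wealth must be non-empty and of equal length")
    return [h for _, h in sorted(zip(wealth, health))]


def concentration_index(health: Sequence[float], wealth: Sequence[float]) -> float:
    """Concentration index via the convenient covariance formula C = 2 cov(h, r) / mean(h).

    r is each person's fractional wealth rank, (i - 0.5) / n, poorest first.
    Assumes unweighted individual-level data with no ties in wealth.
    """
    h = sort_by_wealth(health, wealth)
    n = len(h)
    mean_h = sum(h) / n
    ranks = [(i - 0.5) / n for i in range(1, n + 1)]
    mean_r = sum(ranks) / n  # always 0.5
    cov = sum((hi - mean_h) * (ri - mean_r) for hi, ri in zip(h, ranks)) / n
    return 2 * cov / mean_h


def concentration_index_from_curve(health: Sequence[float], wealth: Sequence[float]) -> float:
    """Same index computed geometrically: 1 - 2 * (area under the concentration curve)."""
    h = sort_by_wealth(health, wealth)
    n, total = len(h), sum(h)
    area, prev = 0.0, 0.0
    for value in h:
        cum = prev + value / total  # cumulative share of the health variable
        area += (prev + cum) / 2 / n  # trapezoid of width 1/n on the population axis
        prev = cum
    return 1 - 2 * area


# Hypothetical example: infant mortality falls as wealth rises.
mortality = [0.10, 0.08, 0.06, 0.04, 0.02]
wealth_index = [1, 2, 3, 4, 5]
print(round(concentration_index(mortality, wealth_index), 4))  # -0.2667
print(round(concentration_index_from_curve(mortality, wealth_index), 4))  # -0.2667
```

Both routes give the same negative number, reflecting mortality concentrated among the poor; a perfectly flat mortality profile would give zero.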

You can also just compare, say, mortality rates for the top and bottom quintiles of the wealth distribution, or the top 1% vs. the bottom 99%, or virtually any other division, but all of those measures essentially ignore a large amount of information in the middle of the distribution, or require arbitrary cutoffs. The beauty of concentration curves and indexes is that they use all available information. An even better approach is to use multiple measures of inequality and see whether the changes you observe are sensitive to your choice of measure; the case is more convincing if they're not.
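A small illustration of what the quintile comparisons miss, using made-up mortality rates for two hypothetical countries. The sketch assumes five equal-sized wealth quintiles ordered poorest first and computes a grouped concentration index by placing each quintile at the midpoint of its slice of the population; the function names are illustrative only.

```python
from typing import Sequence


def quintile_ratio(rates: Sequence[float]) -> float:
    """Poorest-quintile rate divided by richest-quintile rate (rates ordered poorest first)."""
    return rates[0] / rates[-1]


def quintile_difference(rates: Sequence[float]) -> float:
    """Poorest-quintile rate minus richest-quintile rate."""
    return rates[0] - rates[-1]


def grouped_concentration_index(rates: Sequence[float]) -> float:
    """Concentration index for equal-sized groups ordered poorest first.

    Each group sits at the midpoint of its slice of the cumulative population,
    so C = (2 / mean) * sum(share * rate * midpoint) - 1.
    """
    k = len(rates)
    share = 1 / k
    mean = sum(rates) / k
    midpoints = [(j + 0.5) / k for j in range(k)]
    return 2 / mean * sum(share * r * m for r, m in zip(rates, midpoints)) - 1


# Hypothetical under-five mortality rates by wealth quintile, poorest first.
country_a = [0.10, 0.09, 0.08, 0.07, 0.02]
country_b = [0.10, 0.03, 0.03, 0.03, 0.02]
for name, rates in [("A", country_a), ("B", country_b)]:
    print(name, round(quintile_ratio(rates), 2), round(quintile_difference(rates), 2),
          round(grouped_concentration_index(rates), 3))
```

Both hypothetical countries have the same poorest-to-richest ratio (5) and the same difference (0.08), yet their concentration indexes differ (about -0.20 versus about -0.30) because the middle three quintiles differ.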

The new Wagstaff, Bredenkamp, and Buisman paper uses such concentration indexes, and other measures of inequity, to "examine differential progress on health Millennium Development Goals (MDGs) between the poor and the better off within countries." They use a whopping 235 DHS and MICS surveys conducted between 1990 and 2011, and find the following:

> On average, the concentration index (the measure of relative inequality that we use) neither rose nor fell. A rosier picture emerges for MDG intervention indicators: whether we compare rates of change for the poorest 40 percent and richest 60 percent or consider changes in the concentration index, we find that progress has, on average, been pro-poor.
>
> However, behind these broad-brush findings lie variations around the mean. Not all countries have progressed in an equally pro-poor way. In almost half of countries, (relative) inequality in child malnutrition and child mortality fell, but it also increased in almost half of countries, often quite markedly. We find some geographic concentration of pro-rich progress; in almost all countries in Asia, progress on underweight has been pro-rich, and in much of Africa, inequalities in under-five mortality have been growing. Even on the MDG intervention indicators, we find that a sizable fraction of countries have progressed in a pro-rich fashion.
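The first comparison in that quote, rates of change for the poorest 40 percent versus the richest 60 percent, can be sketched as follows. This assumes a compound annual rate of change between two surveys (the paper's exact formula may differ), and the function names and numbers are hypothetical. For a bad outcome like mortality, progress is pro-poor if it falls faster among the poor; for a good one like vaccination coverage, if it rises faster among the poor.

```python
def annual_rate_of_change(start: float, end: float, years: float) -> float:
    """Compound annual rate of change between two surveys (-0.03 means a 3% fall per year)."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end / start) ** (1 / years) - 1


def classify_progress(poor_start: float, poor_end: float,
                      rich_start: float, rich_end: float,
                      years: float, higher_is_better: bool, tol: float = 1e-9) -> str:
    """Label progress as pro-poor, pro-rich, or neither by comparing annual rates of change."""
    rate_poor = annual_rate_of_change(poor_start, poor_end, years)
    rate_rich = annual_rate_of_change(rich_start, rich_end, years)
    gap = rate_poor - rate_rich
    if not higher_is_better:
        gap = -gap  # for bad outcomes, a faster fall among the poor counts as pro-poor
    if abs(gap) <= tol:
        return "neither"
    return "pro-poor" if gap > 0 else "pro-rich"


# Hypothetical under-five mortality (deaths per 1,000), poorest 40% vs richest 60%, 10 years apart.
print(classify_progress(120, 90, 80, 60, years=10, higher_is_better=False))  # neither
# Hypothetical vaccination coverage rising from 30% to 60% (poor) and 60% to 80% (rich).
print(classify_progress(0.30, 0.60, 0.60, 0.80, years=10, higher_is_better=True))
```

In the mortality example, the absolute gap narrows from 40 to 30 deaths per 1,000, yet the result is "neither": equal rates of change leave relative inequality unchanged, which is the kind of inequality the concentration index also tracks.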

They also compared the variation that was common across countries with the variation that was common across indicators. In other words, they asked whether the differences arose because, say, some health interventions are simply easier to deliver to the poorest, or because of differences between countries. They found that more of the variation came from differences between countries than from differences between indicators.
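The paper's exact method isn't described here, so as a purely hypothetical illustration, one simple way to ask this question is a balanced two-way sum-of-squares split (a basic ANOVA decomposition) of a pro-poor progress score laid out as a country-by-indicator table. The sketch assumes every country has a score for every indicator; the scores, country names, and function name are made up.

```python
from statistics import fmean


def variance_shares(table: dict[str, dict[str, float]]) -> dict[str, float]:
    """Split the total sum of squares of a balanced country-by-indicator table.

    Returns the share lining up with country means, the share lining up with
    indicator means, and the leftover (interaction/residual) share.
    """
    countries = list(table)
    indicators = list(next(iter(table.values())))
    values = [table[c][i] for c in countries for i in indicators]
    grand = fmean(values)
    country_means = [fmean(table[c][i] for i in indicators) for c in countries]
    indicator_means = [fmean(table[c][i] for c in countries) for i in indicators]
    ss_total = sum((v - grand) ** 2 for v in values)
    ss_country = len(indicators) * sum((m - grand) ** 2 for m in country_means)
    ss_indicator = len(countries) * sum((m - grand) ** 2 for m in indicator_means)
    return {
        "country": ss_country / ss_total,
        "indicator": ss_indicator / ss_total,
        "residual": 1 - (ss_country + ss_indicator) / ss_total,
    }


# Hypothetical pro-poor progress scores (poor minus rich annual rate, percentage points).
scores = {
    "Country A": {"U5MR": 1.0, "Underweight": 2.0, "Vaccination": 3.0},
    "Country B": {"U5MR": 5.0, "Underweight": 6.0, "Vaccination": 7.0},
    "Country C": {"U5MR": 9.0, "Underweight": 10.0, "Vaccination": 11.0},
}
print({k: round(v, 3) for k, v in variance_shares(scores).items()})
```

With these made-up scores, about 94% of the variation lines up with countries and about 6% with indicators, the same qualitative pattern the authors report.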

One point they stress in the discussion is that it has been easier to promote equality in interventions than equality in outcomes, and that part of the story may be the quality of care that poorer citizens receive. From the discussion:

> One hypothesis is that the quality of health care is worse for lower socioeconomic groups; though the poorest 40 percent may have experienced a larger percentage increase in, for example, antenatal visits, they have not observed the same improvement in the survival prospects of their babies. If true, this finding would point to the need for a monitoring framework that captures not only the quantity of care (as is currently the case) but also its quality.